Zijie Wu (Jarrent Wu)

Ph.D. Student in Computer Vision

Huazhong University of Science and Technology (HUST)

I am a third-year Ph.D. student supervised by Prof. Xiang Bai. My research interests include image, video, 3D, and 4D generation, as well as motion synthesis.

💼 Experience

Research Intern (Qingyun Plan)

Hunyuan3D, Tencent (腾讯混元3D)

Aug 2025 - Present

- Selected for the Tencent Qingyun Plan (腾讯青云计划), a top-tier talent program.

- Focusing on Mesh Animation and Motion Synthesis.

Research Intern

DAMO Academy, Alibaba Group (阿里巴巴达摩院)

May 2023 - Jul 2025

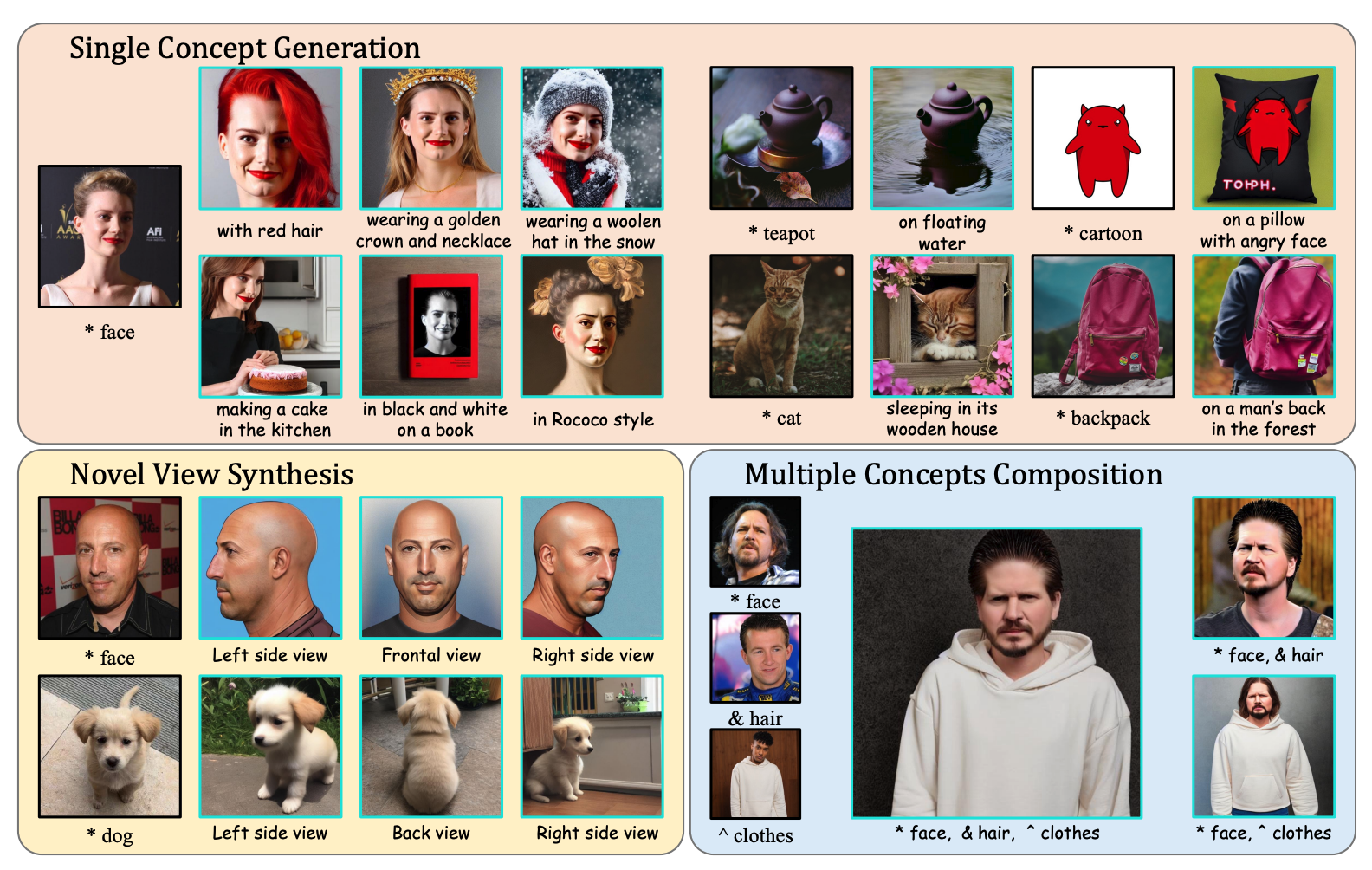

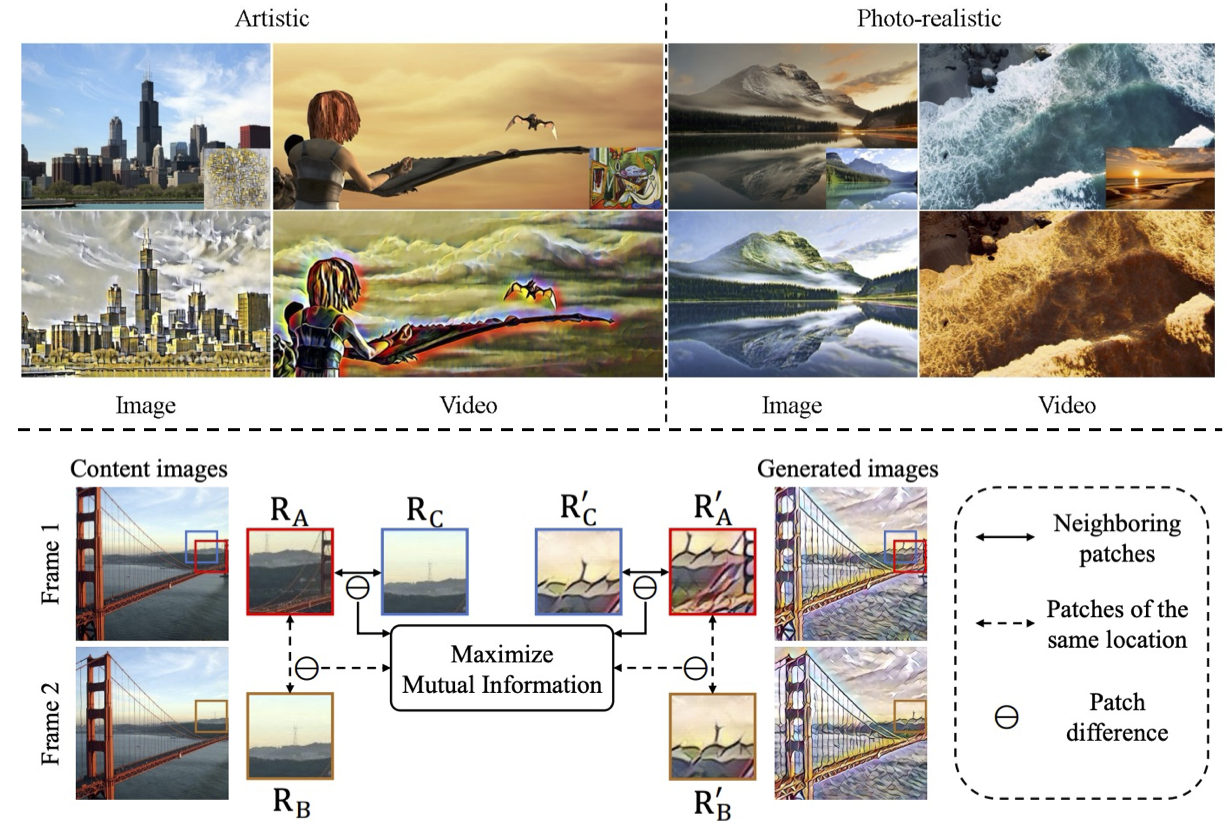

- Conducted research on 2D/3D/4D Generation.

- Published two first-author papers at ECCV 2024 and ICCV 2025.

📝 Selected Publications

\* denotes equal contribution.