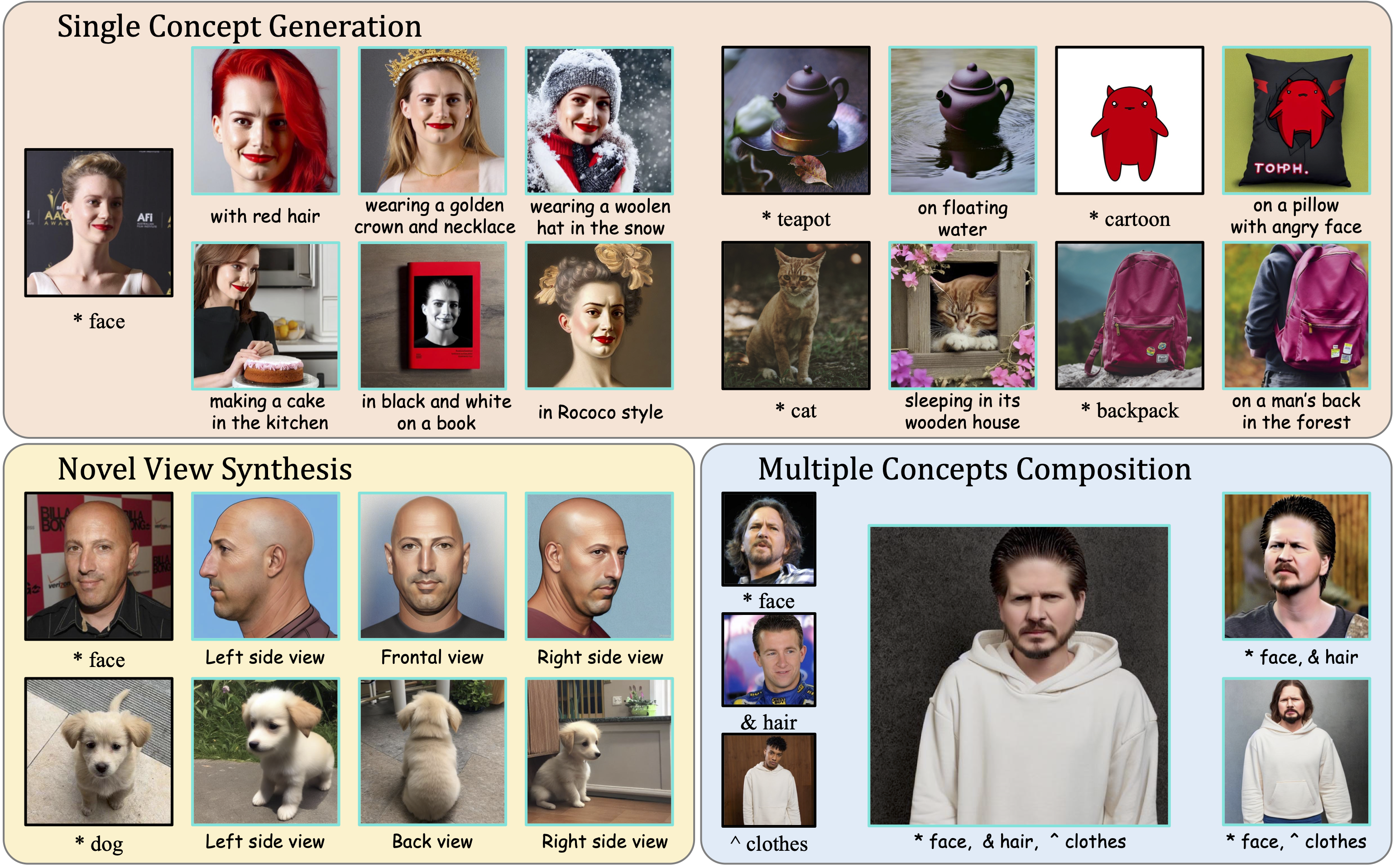

Three main applications of SingleInsert.

Recent progress in text-to-image (T2I) models enables high-quality image generation with flexible textual control. To utilize the abundant visual priors in off-the-shelf T2I models, a series of methods try to invert an image into a proper embedding that aligns with the semantic space of the T2I model. However, these image-to-text (I2T) inversion methods typically either need multiple source images containing the same concept or struggle to balance editing flexibility against visual fidelity. In this work, we point out that the critical problem lies in the foreground-background entanglement when learning an intended concept, and propose a simple and effective baseline for single-image I2T inversion, named SingleInsert. SingleInsert adopts a two-stage scheme. In the first stage, we regulate the learned embedding to concentrate on the foreground area without being associated with the irrelevant background. In the second stage, we finetune the T2I model for better visual resemblance and devise a semantic loss to prevent the language drift problem. With the proposed techniques, SingleInsert excels in single-concept generation with high visual fidelity while allowing flexible editing. Additionally, SingleInsert can perform single-image novel view synthesis and multiple-concept composition without requiring joint training. To facilitate evaluation, we design an editing prompt list and introduce a metric named Editing Success Rate (ESR) for quantitative assessment of editing flexibility.
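As a rough illustration of how a metric like ESR might be computed, the sketch below treats it as the fraction of editing prompts for which the edit is judged successful. This is an assumption: the text above does not say how success is decided for each prompt, and the function name `editing_success_rate` and the boolean per-prompt outcomes are hypothetical, introduced only for this example.

```python
from typing import Sequence


def editing_success_rate(outcomes: Sequence[bool]) -> float:
    """Fraction of editing prompts judged successful.

    Assumption: each entry of `outcomes` is a per-prompt success flag
    produced by some external judgment (human or automatic). How that
    judgment is made is not specified here.
    """
    if not outcomes:
        raise ValueError("need at least one editing prompt")
    return sum(bool(o) for o in outcomes) / len(outcomes)


# Hypothetical outcomes for four editing prompts (illustrative values only).
example_outcomes = [True, True, False, True]
example_esr = editing_success_rate(example_outcomes)
```

Under this reading, a higher value means that more of the prompts in the editing list produced the requested change.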

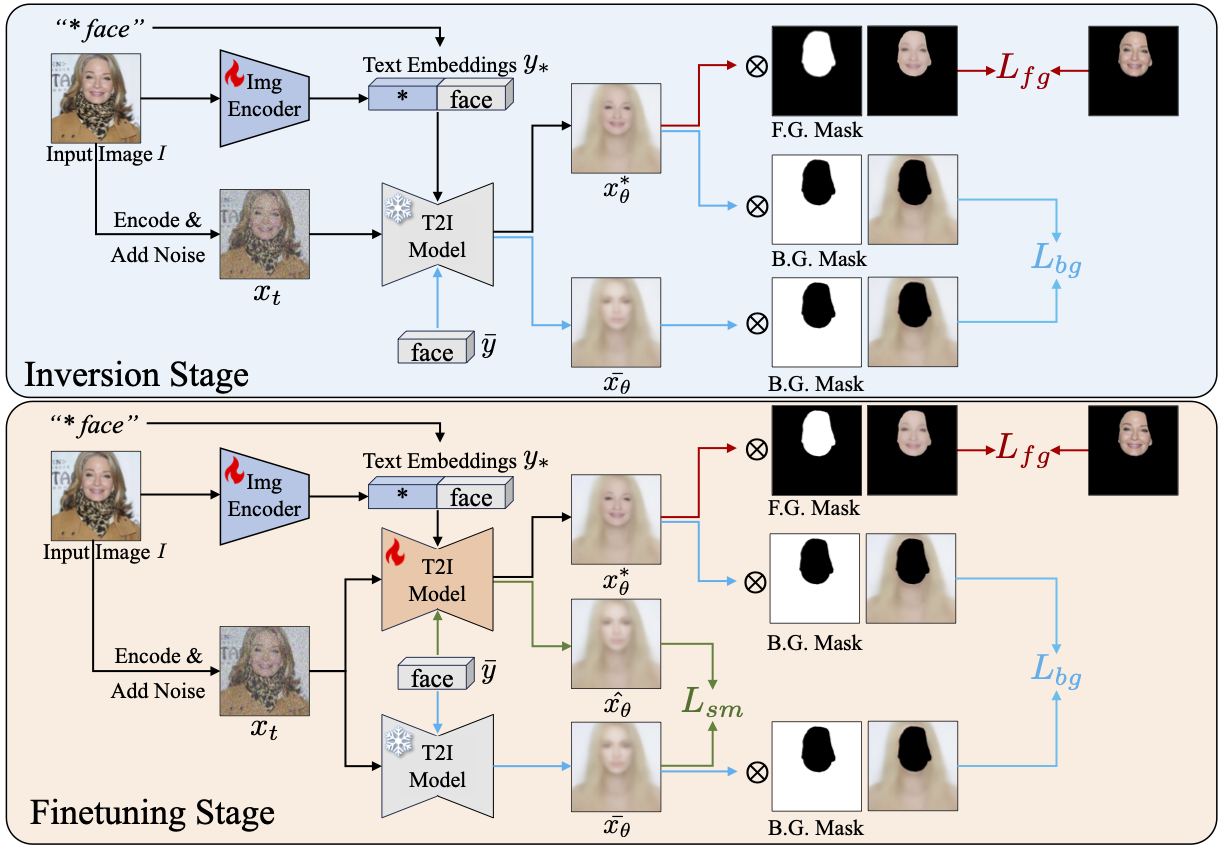

SingleInsert adopts a two-stage scheme as depicted below. In the inversion stage (stage I), we optimize the image-to-text encoder to invert the source image into a suitable embedding that restores the intended concept's identity while disentangling it from the irrelevant background. Subsequently, in the finetuning stage (stage II), we finetune the T2I model along with the image encoder to enhance visual fidelity without compromising editing flexibility. It is worth noting that our proposed semantic loss eliminates the need to generate class-specific datasets beforehand, thereby mitigating the "language drift" problem encountered in existing methods.

The two-stage pipeline of SingleInsert.
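One way to picture the foreground focus of stage I is a reconstruction error that is only counted inside a foreground mask, so that background pixels cannot influence the learned embedding. The sketch below is a minimal illustration under that assumption, not the losses actually used by SingleInsert; the name `foreground_masked_mse` and the plain nested-list image representation are hypothetical.

```python
from typing import Sequence

Grid = Sequence[Sequence[float]]


def foreground_masked_mse(pred: Grid, target: Grid, mask: Grid) -> float:
    """Mean squared error averaged over foreground pixels only.

    Assumption: `mask` holds 1 for foreground and 0 for background,
    and all three grids have the same shape.
    """
    weighted_error = 0.0
    mask_weight = 0.0
    for p_row, t_row, m_row in zip(pred, target, mask):
        for p, t, m in zip(p_row, t_row, m_row):
            weighted_error += m * (p - t) ** 2  # background terms vanish when m == 0
            mask_weight += m
    if mask_weight == 0:
        raise ValueError("mask selects no foreground pixels")
    return weighted_error / mask_weight


# Toy 2x2 example: the left column is foreground, the right column is background.
pred = [[1.0, 5.0], [2.0, 0.0]]
target = [[0.0, 0.0], [0.0, 0.0]]
mask = [[1, 0], [1, 0]]
loss = foreground_masked_mse(pred, target, mask)
```

In the toy example, the large error at the background pixel (5.0 versus 0.0) contributes nothing, which is the behavior the disentanglement goal calls for.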

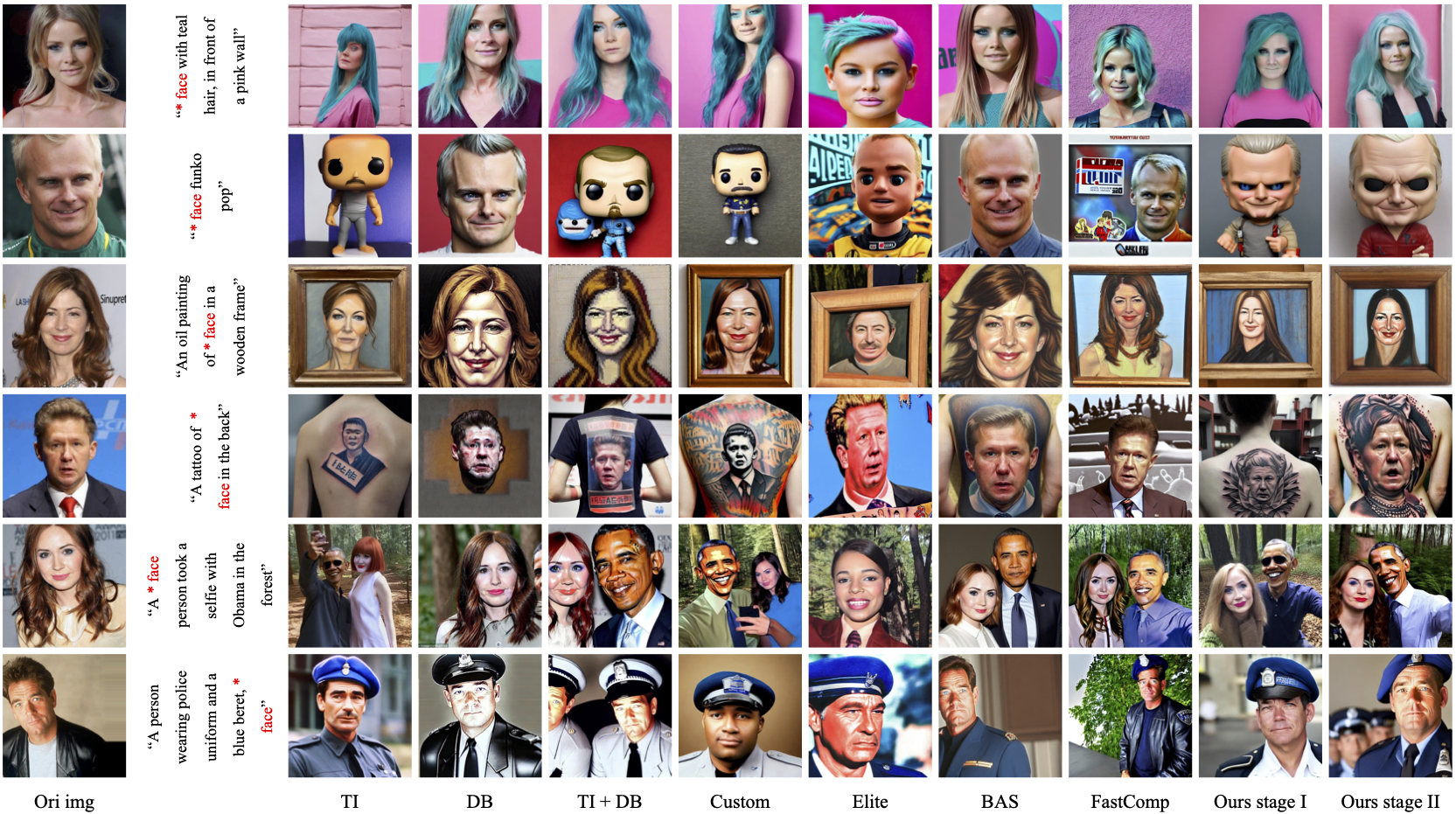

Comparisons on "face" class.

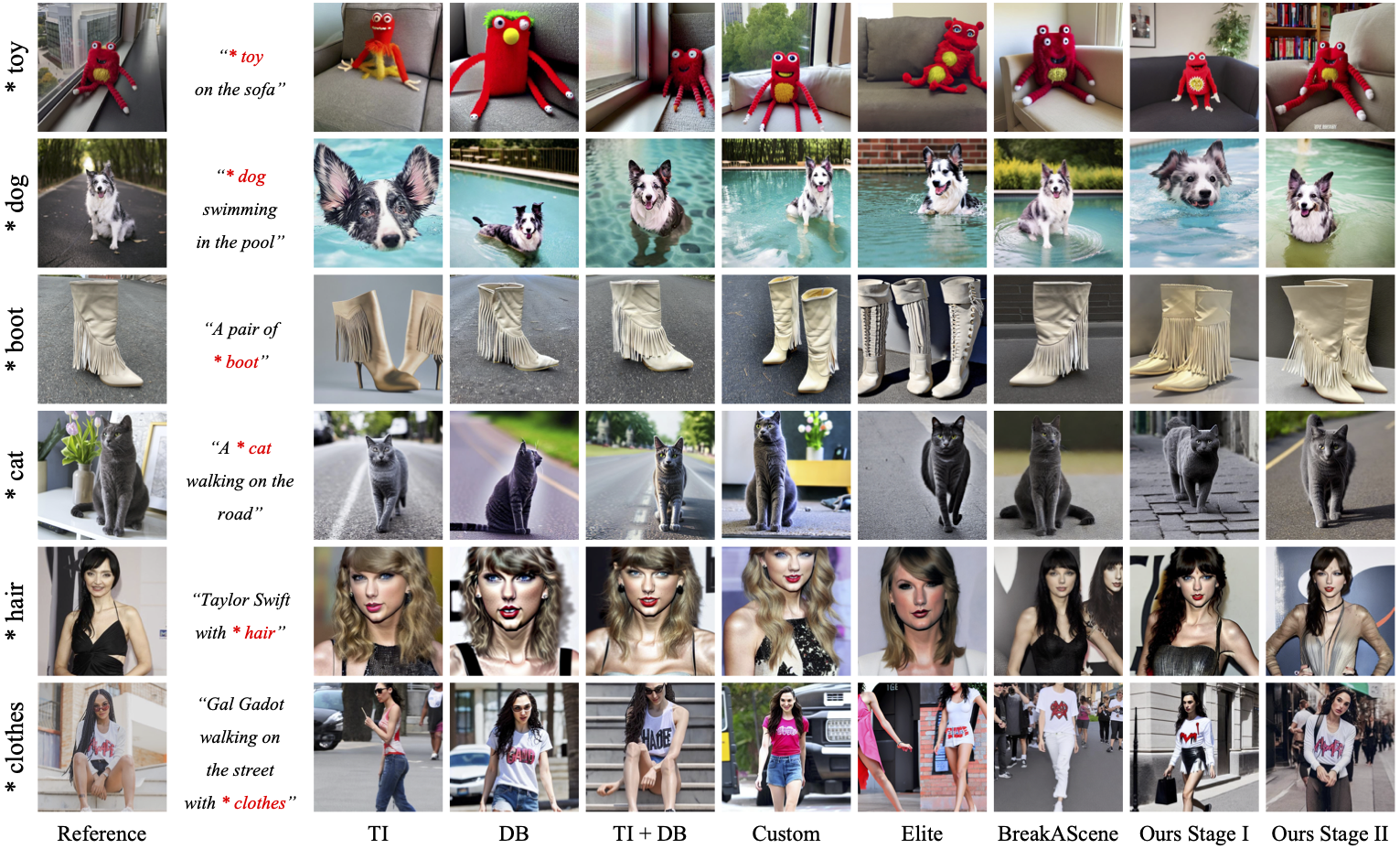

Comparisons in other categories.

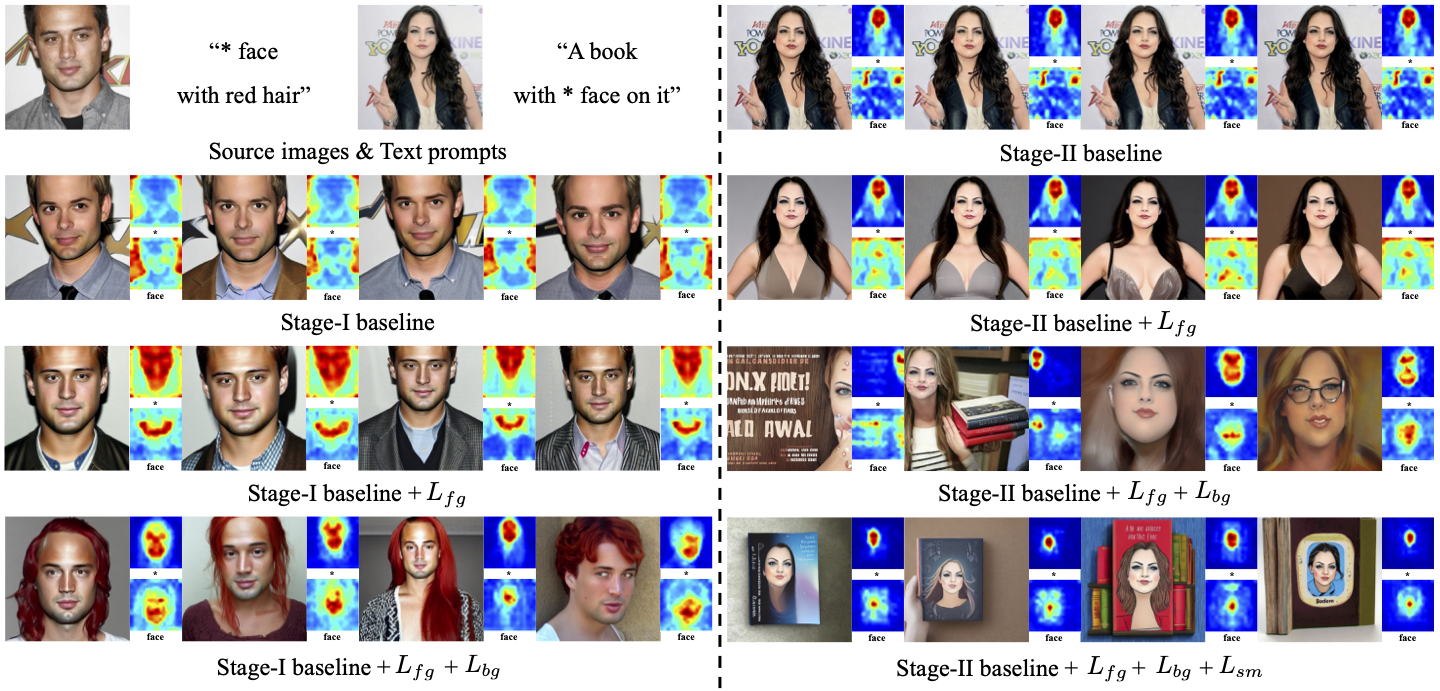

Ablations of the three proposed regularizations.

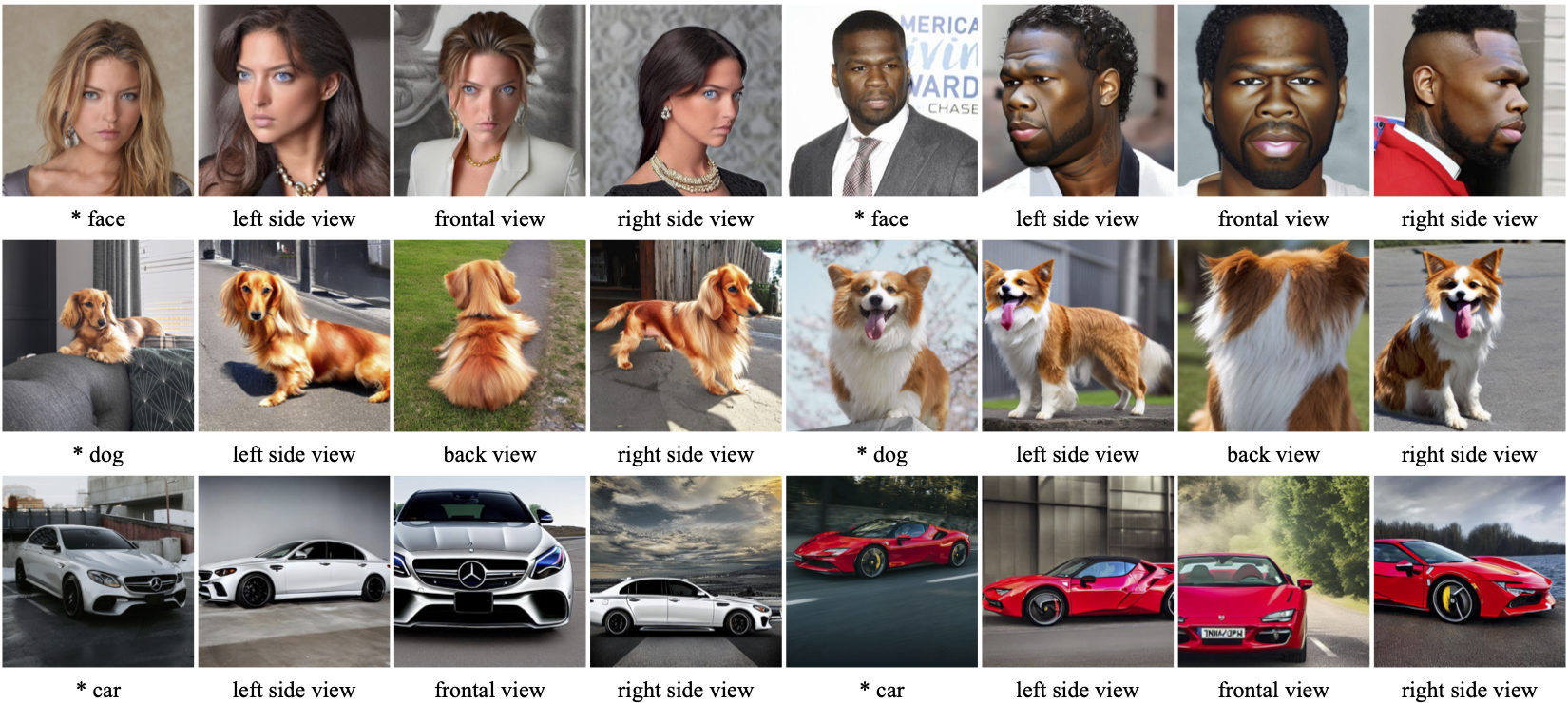

SingleInsert enables reasonable novel view synthesis.

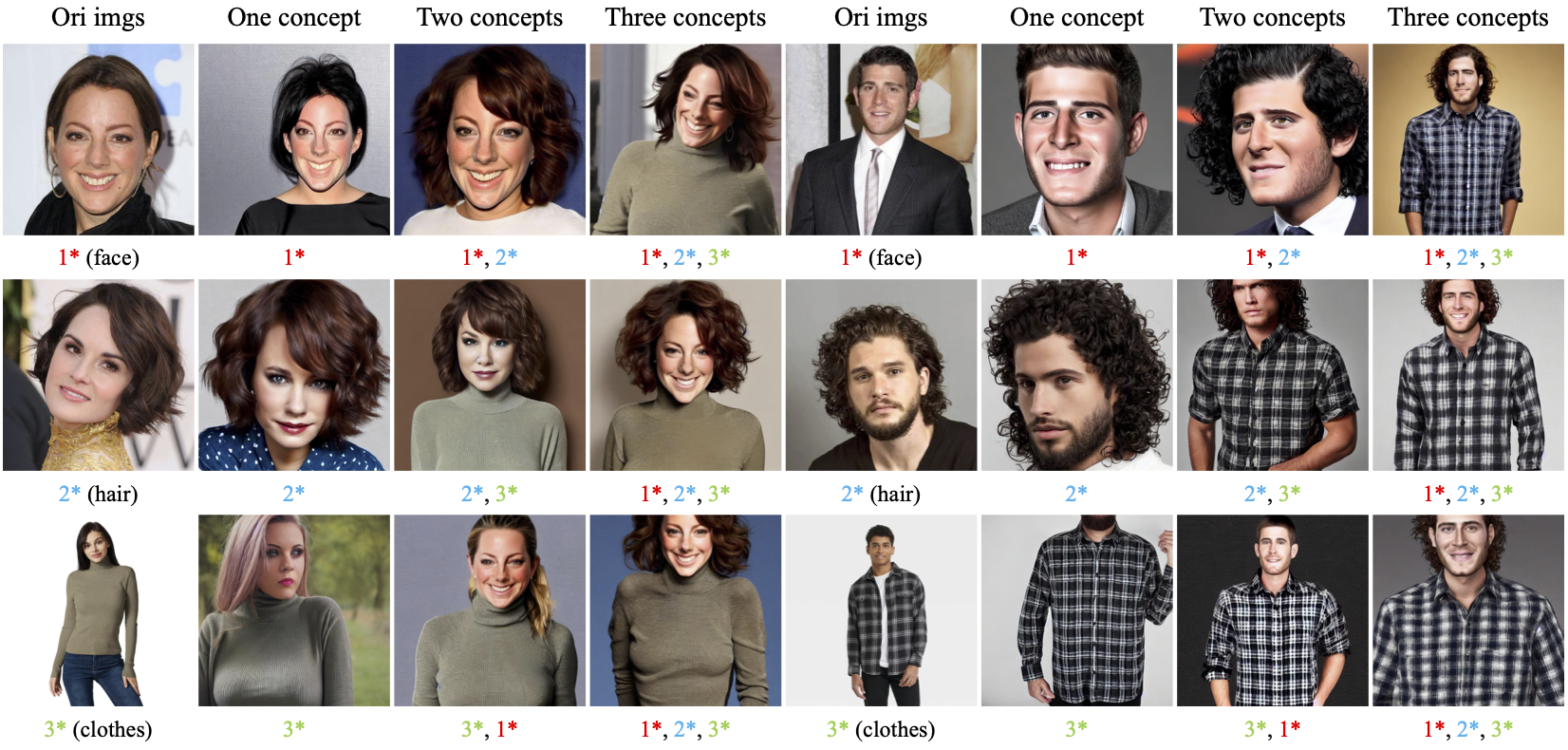

SingleInsert enables multiple-concept composition without the need for joint training.
